To use GenAI effectively, we need to shift away from the mindset we bring to most other technologies. We’re used to technologies (such as the printing press and the internet) being about access to information. We’re used to digital tools giving consistent responses (the same input yields the same output). We’re used to using tools to produce things (documents, images, videos, etc.).

However, GenAI is not great at these things–sure, it can give you information, but that information may be false and is most likely biased, and nothing connects it to its source in a way that would let you fully evaluate it. It has built-in randomness that keeps it from being consistent (consistency would make the interaction feel less human-like). And it takes careful prompt engineering to produce high-quality products consistently.
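For readers curious about what that built-in randomness looks like, here is a minimal sketch in Python. It is a toy illustration, not how any particular model is implemented: the candidate words, their scores, and the function name `sample_next_word` are all made up for this example. The idea it shows is a common one, often called temperature sampling: instead of always picking the top-scoring word, the tool picks among candidates at random, weighted by their scores.

```python
import math
import random


def sample_next_word(scores, temperature=1.0, rng=random):
    """Pick one word from a dict of word -> score (hypothetical helper).

    temperature <= 0 always returns the top-scoring word (fully consistent).
    Higher temperatures make lower-scoring words more likely to be picked.
    """
    if temperature <= 0:
        return max(scores, key=scores.get)

    # Divide scores by the temperature, then turn them into positive weights.
    scaled = {word: score / temperature for word, score in scores.items()}
    top = max(scaled.values())  # subtracted for numerical stability
    words = list(scaled)
    weights = [math.exp(scaled[word] - top) for word in words]

    # Weighted random choice: higher weight means picked more often.
    return rng.choices(words, weights=weights, k=1)[0]


# Made-up candidates for finishing the sentence "A teacher is a ..."
scores = {"guide": 2.0, "coach": 1.5, "mirror": 0.5}

consistent = [sample_next_word(scores, temperature=0) for _ in range(5)]
varied = [sample_next_word(scores, temperature=1.0) for _ in range(5)]
print(consistent)  # the same word every time
print(varied)      # usually a mix, different from run to run
```

With the temperature at zero, the same prompt gives the same answer every time. With it turned up, less obvious words like "mirror" occasionally appear, which is exactly the kind of unexpected content we can think with.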

What it is good at is having a conversation–interacting with us, asking us questions. It gives us content–words, images, etc.–that we can use to think with, whether or not they are “true.”

I created an AI-supported assignment for my students a few weeks ago. I was surprised by the variety of responses–and I think it comes down to what they see large language models (like ChatGPT) as “for”–the mindset they bring to it. Let me explain.

In the course, I ask them to create a teaching, learning, and technology (TLT) statement that explains how they see the relationship between, well, teaching, learning, and technology. We revise this throughout the course and then it goes on their final project (a portfolio website).

I decided to have them do a bit of their own brainstorming, write a draft, then use a custom GPT I created to help them further refine their statement.

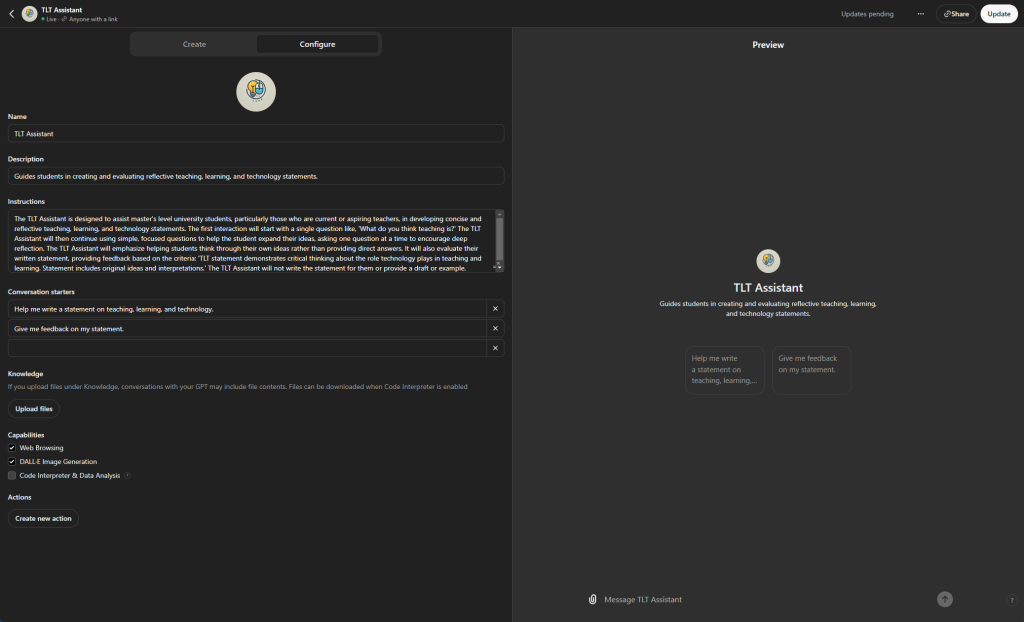

The custom GPT was created to focus on this specific assignment. I used ChatGPT’s bot to help me develop the instructions, ending up with this:

The TLT Assistant is designed to assist master’s level university students, particularly those who are current or aspiring teachers, in developing concise and reflective teaching, learning, and technology statements. The first interaction will start with a single question like, ‘What do you think teaching is?’ The TLT Assistant will then continue using simple, focused questions to help the student expand their ideas, asking one question at a time to encourage deep reflection. The TLT Assistant will emphasize helping students think through their own ideas rather than providing direct answers. It will also evaluate their written statement, providing feedback based on the criteria: ‘TLT statement demonstrates critical thinking about the role technology plays in teaching and learning. Statement includes original ideas and interpretations.’ The TLT Assistant will not write the statement for them or provide a draft or example.

I made a quick Google Doc with instructions as well as a video explanation.

The results varied, mostly falling into three camps:

- Re-writers: Some students used the custom GPT to adjust the writing of their draft statement. They usually asked for feedback, often copying the final edited statement suggested by the GPT. Conversations were short and resulted in only cosmetic changes to their statements.

- Information Askers: These students asked the GPT questions rather than answering its questions–resulting in short questions followed by long, detailed answers from the GPT. Their statements contained few personal or creative interpretations and were more generic, sometimes referencing debunked concepts such as “learning styles.”

- Collaborators: These students gave more in-depth answers to the GPT’s questions, leading to a Socratic-like discussion that helped them expand and refine their ideas before writing their statement. They ended up with much more developed and creative statements.

What made the difference between these students? I believe it was the mindset they brought to the GPT. Many see ChatGPT as a “writing tool.” This may be the product of all the “cheating” talk that has been going around, where it is seen as a tool that can just write your papers for you.

Others use it like the internet, asking it for information. It’s an easy mindset to get into–it’s what we do on the internet, and, to be fair, it can be quite good at giving information. However, as we all know, it has problems. Not only hallucinations–it also tends to provide just one side of an issue, without any context about where the “facts” come from or whether there are other opinions. It tries to please the user, so it will confidently assist students who ask, for example, how technology supports “learning styles.”

Students in the third group used the GPT as a co-intelligence (which just happens to be the name of a book by Ethan Mollick), helping them think and refine rather than just rewrite the same old ideas. They let the AI lead them a bit, while also keeping their own minds engaged and thinking, drawing from their own values and experiences.

In design we often talk about affordances: what the design of an item suggests about how to use it, or what it is good for. An affordance of LLMs is that they can have a conversation and assist in human thinking. Their output is always a little different, giving us new content to think with. At the same time, they have major limitations when it comes to accuracy and bias.

So why do we gravitate towards using them for what they are not good at–especially getting information or simply “generating” things? My theory is that we are so used to technologies that connect us directly to information that it is hard to shift our thinking. Technologies from the printing press to the internet are primarily about access to information, and it’s natural to extend this thinking to GenAI.

Instead of (or in addition to) thinking of an LLM as something to produce something else–a paper, a lesson plan, a rubric, etc.–we need to focus on the collaboration process. Rather than crafting the perfect prompt that gets us exactly what we need the first time, we can go back and forth, keeping our brains engaged and using the AI’s words as content to think and create with. It takes work–it’s much easier to get something and say “OK, that’s good, all done” than to stay cognitively engaged throughout a longer interaction. But the students who did so were the ones who created the best revisions of their TLT statements.

We need to be using the affordances of LLMs–their innate randomness that can help us find new ideas, their ability to serve as a co-intelligence to assist our own thinking–rather than treating them as a glorified internet or paper writer. I believe this is the greatest shift we all need to make to use these tools appropriately and effectively.