Today I had lots of fun with middle and high school students at the New Mexico Educators Rising State Conference in Albuquerque, New Mexico.

We did several activities that helped us think about how AI works: it predicts the next token based on its (biased) training data. It doesn’t “know” anything; it fills in the blanks. Here’s what we did:

Images, Bias, and Prompts

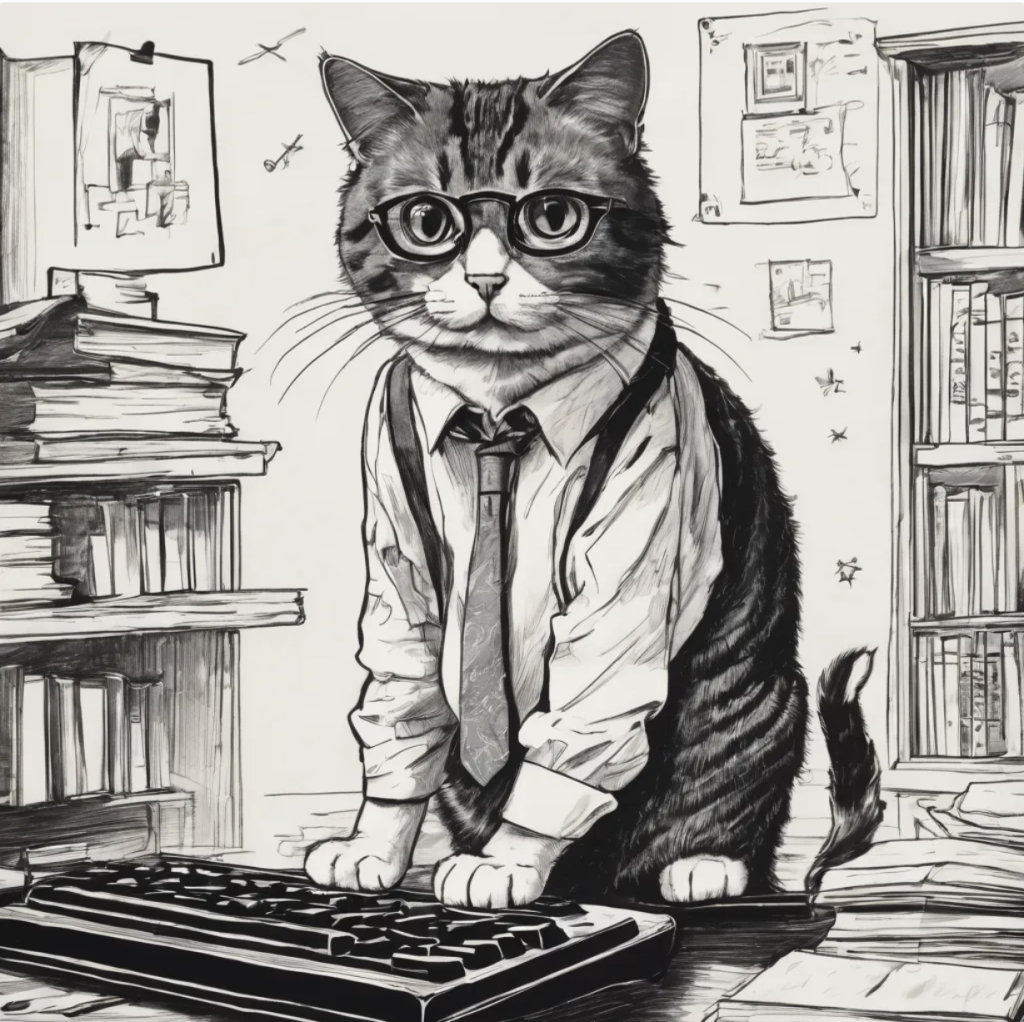

We created pictures that represented ourselves using Padlet’s AI image creator. For example, I asked it to create a “Frazzled cat pretending they are a professor standing on a keyboard trying to get through emails” (35 emails in my inbox since last night…) and got this:

We noticed that it gave me a tie (actually, every picture option it gave me had a tie), maybe because its training data tends to show professors as men.

We also noticed that the more detailed our description, the better the picture we got. That’s something important to keep in mind as we use AI.

Predicting the Next Token

Next, we talked about how Large Language Models work: they predict the next word (or part of a word) based on their training data. That training data represents only some people’s perspectives and has human biases embedded in it. AI learns from people’s representations of their world, not from experience, so it doesn’t know anything. As Leon Furze says, “Chatbots don’t make sense. They make words.”

We experimented with a custom GPT I created that shows the “five most common next tokens” for a phrase. We tried phrases like “The best food is” and “The capital of New Mexico is” to see how it draws on what a lot of people think, but not everyone, and how it is sometimes right and sometimes wrong.
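To make the “most common next tokens” idea concrete, here is a toy sketch in Python. It is an assumption-heavy simplification, not how the custom GPT or any real LLM works: real models use neural networks over subword tokens, while this just counts which word follows a phrase in a tiny, invented corpus (all sentences and function names below are hypothetical). Even so, it shows the key point: the “prediction” is whatever the data says most often, including mistakes in the data.

```python
from collections import Counter

# A tiny, made-up "training corpus" (hypothetical data for illustration only).
corpus = [
    "the best food is pizza",
    "the best food is tacos",
    "the best food is pizza",
    "the best food is green chile",
    "the best food is sushi",
    "the best food is tacos",
    "the best food is pizza",
    "the best food is ice cream",
    "the capital of new mexico is santa fe",
    "the capital of new mexico is santa fe",
    "the capital of new mexico is albuquerque",  # a common mistake in the data
]


def next_token_counts(corpus, prefix):
    """Count which word follows `prefix` in each sentence of the corpus."""
    prefix_words = prefix.lower().split()
    n = len(prefix_words)
    counts = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        # Only count sentences that start with the prefix and continue past it.
        if words[:n] == prefix_words and len(words) > n:
            counts[words[n]] += 1
    return counts


def top_next_tokens(corpus, prefix, k=5):
    """Return up to k most common next words with their share of all continuations."""
    counts = next_token_counts(corpus, prefix)
    total = sum(counts.values())
    return [(word, count / total) for word, count in counts.most_common(k)]


for phrase in ["The best food is", "The capital of New Mexico is"]:
    print(phrase)
    for word, prob in top_next_tokens(corpus, phrase):
        print(f"  {word}: {prob:.1%}")
```

Notice that the capital example predicts only “santa” as the next token; “fe” would come from a second prediction. And because the invented corpus contains an error, “albuquerque” still gets a share of the probability, which mirrors how a model can be confidently wrong when its data is.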

AI Fills in the Blanks (based on its data)

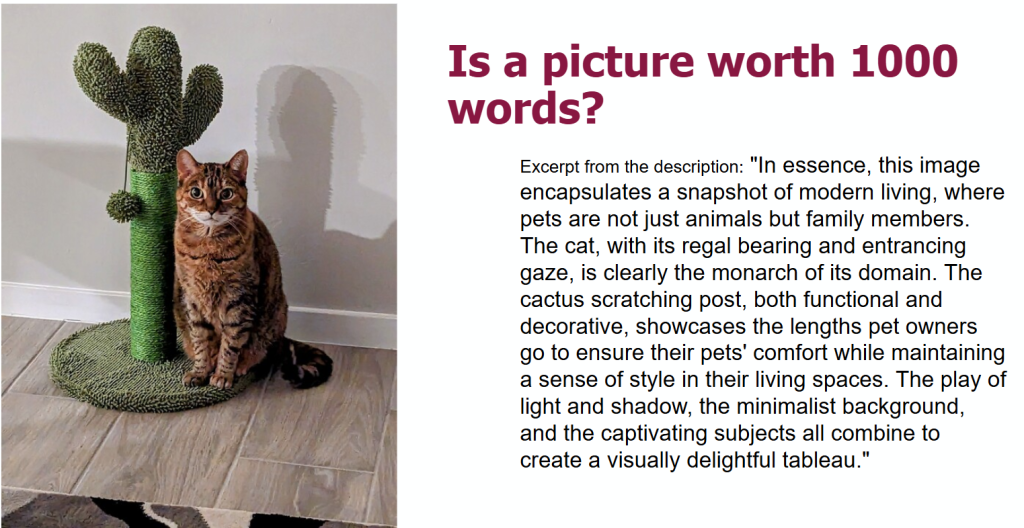

Next, we did my favorite image game (courtesy of Nicole Oster) where we:

- Ask an LLM for a 1000-word description of a picture

- Copy the 1000 words

- Start a new chat and paste in the 1000 words

- Ask for a new picture based on the 1000 words

Here’s an example:

We did one with a picture of the whole group and noticed how AI “fills in the blanks”: it decided that, since we were in a conference room, it should add plants, cups of coffee on the tables, and a whiteboard on the side. It also turned us all into models!

AI is “messed up”: Optical Illusions and Bias

Next, we explored how AI explains (or fails to explain) optical illusions, based on the work of Punya Mishra. I also described my studies of AI bias, using the example of students’ music interests, and discussed how sensitive these models are to a change in a single word.

Summing It All Up: AI Songs

Finally, we summarized what we learned today in three sentences:

“AI is messed up. AI is biased. AI doesn’t know anything.”

We asked ChatGPT for some rap lyrics based on this summary, then used suno.com to turn them into a song (it actually created two).

Enjoy:

These future educators were smart, creative, and attentive. I am so excited for the next generation of teachers!