Today, my colleague Dave Rutledge and I did an activity with our undergraduate educational technology class. We wanted to briefly discuss some equity issues as well as give them a chance to try out the new OpenAI image generator–and hopefully learn something along the way.

Content-wise, we wanted to introduce them to the “digital divides” described in the US National Educational Technology Plan 2024. This plan goes beyond the common discussion of the “digital divide” (how some students have access to devices and others do not) to explore three types of digital divides:

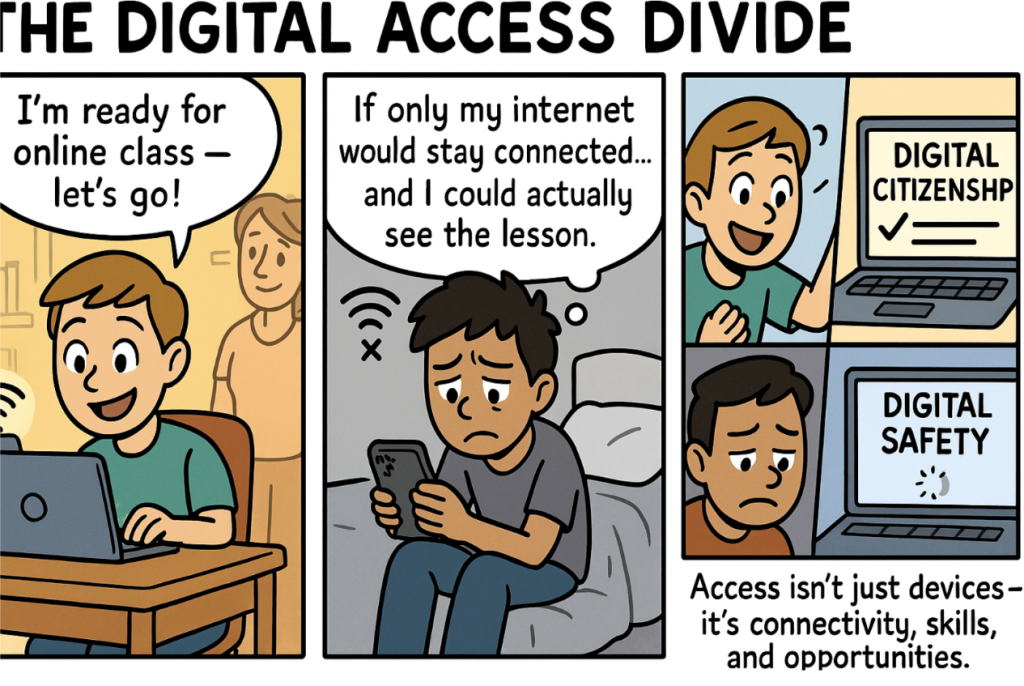

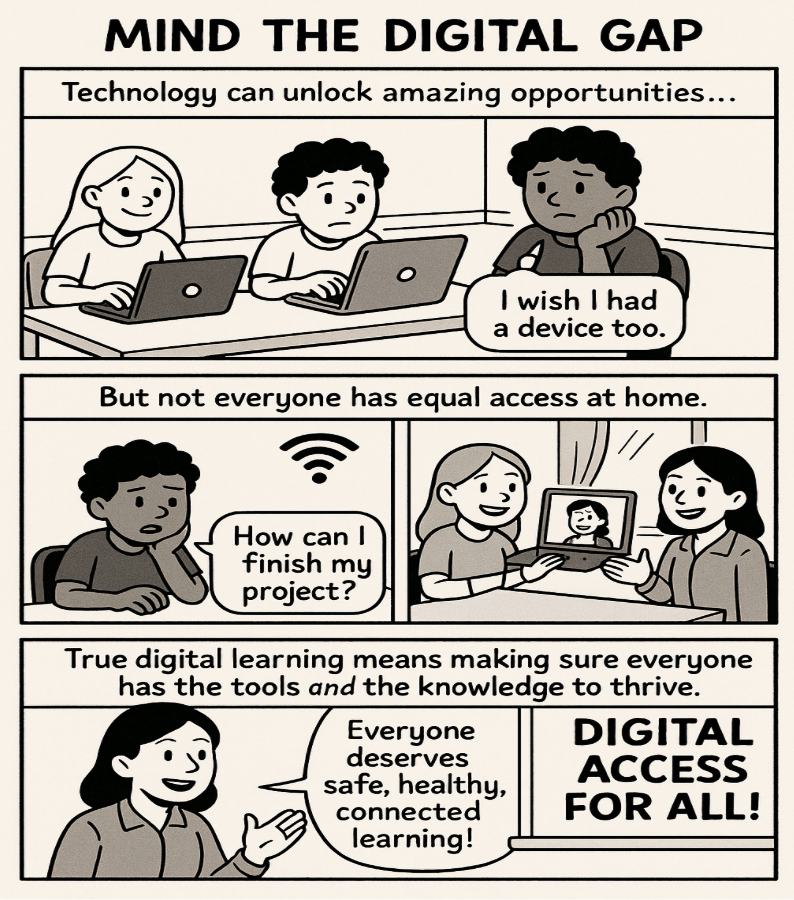

- Digital Access Divide: Inequitable access to digital tools, including access to instruction on digital citizenship, health, and safety.

- Digital Design Divide: Differential abilities for teachers to design technology-supported learning experiences for their students. This includes a lack of time, professional development, and support.

- Digital Use Divide: Differences in how students use educational technology: some use the tools for critical and creative products (build, create, and analyze), while others use them in more passive ways, such as watching videos or working through direct instruction programs.

So. We had them:

- Review a summary of the US National Educational Technology Plan 2024

- Work with a group (and AI) to create a story that illustrates their assigned type of divide: digital access, digital design, or digital use

- Use AI to create a comic strip of their story and share

As Dave and I watched, we noticed that this AI-supported activity was about more than just using AI to create something. It pushed students to think more deeply, not just about digital divides but also about AI, society, and themselves, and it helped us, as teachers, evaluate whether they were really “getting it”.

Here are some things that we noticed and discussed:

1. Digital Access Divide and Equity

The Digital Access Divide was the easy one. It’s closest to the traditional digital divide, and ChatGPT knew all about that!

But what made this interesting was looking a bit more closely at the pictures, especially the first two! The “have-not” kids had darker skin than the others (and in the first one, even a darker background). This opened up a conversation about equity and race: not just how race is portrayed in AI, but inequity in society at large and its complexities.

We also discussed how some of them didn’t notice the differences at first, as if they were subliminal messages. We don’t want our world to continue this way, so we need to be aware of the image of “reality” AI is sending us–we need to critically evaluate what we are seeing so we can change it.

2. Uncovering Misunderstandings

This activity also let us go deeper into the content and what these digital divides are. Looking at the images produced by ChatGPT, and whether or not students were aware of what was and was not a representation of the assigned digital divide concept, gave us, as teachers, insight into how the students were thinking. It also showed how easy it is to just go along with what AI says. (Note: I owe this approach to Ethan Mollick, who described how AI can “eliminate the illusion of explanatory depth”.)

For example, the Digital Use Divide is not about access to devices–it is about how students use the devices. But ChatGPT (and our students) seemed to struggle to get over the “traditional,” access-focused digital divide.

Here’s an example–a comic by a group assigned to focus on the Digital Use Divide:

Although perhaps a creative take, the idea of a “device swap” highlights the devices: who has them and who doesn’t (with a little digital literacy thrown in at the end). This group’s decision to use this comic showed us that they might not quite understand the Digital Use Divide.

If we had asked them to create their own stories without AI, they might have done a bit better, but ChatGPT saw the words “digital divide” and gravitated toward the traditional concept. Without critical thinking and good prompting skills, students got the norm; to fix it, they had to really engage their own understanding and their prompting skills.

3. Refining Ideas

Other comics were much closer to the intended concepts–consider this on the Digital Design Divide:

It gets the basic idea: teachers struggle and need to learn to design with technology. But the problems the teacher is having are technical, not the deeper pedagogical considerations we hope we are helping teachers think through.

Of course, technical skills go hand-in-hand with the design skills, and this comic does get to “co-design,” but it is stuck on a more traditional view of technology in teacher education–that teachers just need tech skills and they will be good to go.

Then we have this:

Our students had a lot of fun with ghost pictures here–but they also were able to get to something deeper. They got closer to the intention of the Digital Design Divide, moving away from “learning tools” to building creative learning experiences with technology.

Finally, the comics helped us address an issue that might also have led to misconceptions: most of the examples suggested the digital divide was a problem you could see within a single classroom. However, two of the comics moved this to more of a systems level: the “Great Device Swap” above and the comic below looked at differences between schools. This helped us see that you might not notice the digital divide within your own classroom, because it is a systemic issue, more of a challenge between schools and districts than within classrooms.

Learning From ChatGPT

Ultimately, our activity opened up rich discussions that went beyond the typical definitions of the digital divide. We were able to talk about how these ideas are seen in society, how they are normally envisioned, and how the new National Educational Technology Plan is different.

By producing stuff–in this case comics–and reflecting together, we deepened our understandings of:

- AI: its bias, its tendency to give the “normal” and make assumptions

- Ourselves: what we do and don’t notice about what we are getting from AI, what assumptions we make because AI made them

- Society: inequity, systems thinking, and the patterns represented in AI

I hope these types of experiences will help our future teachers think critically and creatively about technology and learning!