This week, I had the honor of presenting at the 3rd Annual Innovations in the Science of Teaching and Learning Conference in Durban, South Africa. My talk built on my work on bias in AI and critical pedagogy, and brought in some insights from Jeffrey Abbott and Andrew Maynard’s forthcoming book, AI and the Art of Being Human.

In this book, Abbott and Maynard discuss AI as a mirror, but “not merely passive mirrors that make us think about ourselves—they actively construct versions of us, reshaping how we see ourselves and proposing subtle identity shifts, moment by moment, interaction by interaction.” What does this mean when we, and particularly our kids, interact with an AI that stereotypes and generalizes? How do we keep it from erasing our complex and culturally unique traits?

When preparing my talk, I took a picture of the conference itself and asked AI to write a 1,000-word description of it. I then gave that description back to the AI and asked for a new picture. This was the result:

The erasure of the richness of this conference–and the imposition of what AI thinks a conference looks like–is shocking.

Abbott and Maynard propose that we need to learn to “See ourselves seeing the mirror”: to develop the reflection and metacognition needed to understand the AI mirror, ourselves, and how the AI mirror impacts us.

At the conclusion of my talk, participants commented and asked questions about how to make the data more representative of their culture. They brought up the idea of purposefully injecting their images and ideas into the data sets, something I had never thought of before. I’m grateful for the chance to learn from the amazing people of South Africa!

Slides

Resources

Punya Mishra: punyamishra.com

Andrew Maynard: futureofbeinghuman.com (see more about his upcoming book here)

Ethan Mollick: oneusefulthing.org

AI Analysis Assignment

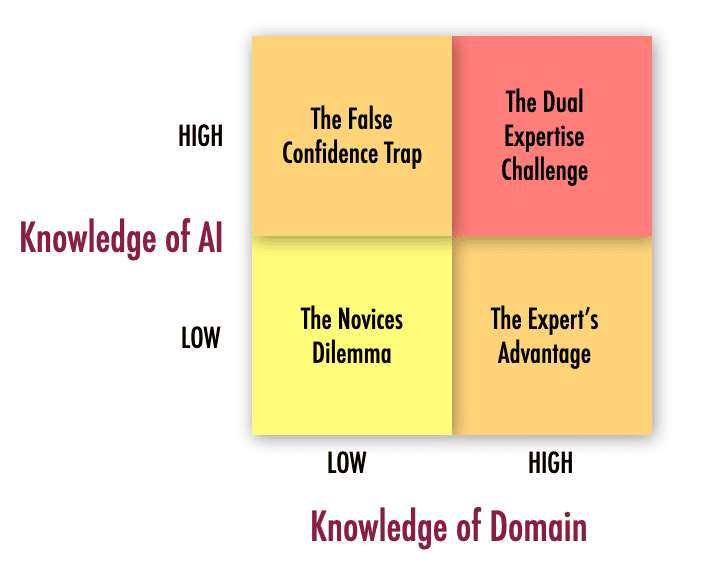

This assignment is an attempt to address the expertise paradox: how domain knowledge interacts with AI knowledge to shape how AI is used. It aims to give learners experience with how expertise changes the way they interact with AI, which may in turn affect how they understand AI in areas where they have less expertise.

This assignment helps you practice analyzing AI-generated content in an area where you already have expertise. By starting with your own knowledge, you’ll practice identifying what AI gets right, wrong, or oversimplified. These are skills you can then apply when using AI for lesson planning or other educational tasks. You will also see whether what the AI writes, correct or incorrect, helps you come up with new ideas of your own. This helps you start working with AI as a creative collaborator.

You’ll evaluate an AI response, question its limitations, and reflect on how your expertise shaped your analysis.

See an example of this assignment here.

Step 1: Generate Content

Choose a topic you have expertise or experience in. Ask an AI tool: “Tell me about [your topic].” Copy the AI response into a Google Doc.

Step 2: Color-Code the Response

Read through the AI response and highlight text using these colors:

- Green: Excellent – AI got this exactly right

- Yellow: Missing something – Accurate but incomplete

- Red: Wrong – AI got this incorrect

- Blue: My experience differs – AI’s answer might work for others, but my experience suggests something different

- Purple: This made me think of something new

Step 3: Add Comments

Choose 3-5 of your highlighted sections and add a comment explaining your thinking. What makes it excellent, wrong, incomplete, or different from your experience? What new ideas did it spark in your thinking?

Step 4: Reflection 1

Briefly answer these questions:

- What did the AI understand well about your topic?

- What would someone miss if they only learned from this AI response?

- How did your expertise help you evaluate this content?

Step 5: Push Back

Go back to the AI with 1-2 follow-up questions or prompts to address something you highlighted in yellow, red, or blue. For example: “You said X, but what about Y?” or “Can you add more nuance about Z?” Copy the AI’s new response into your document.

Step 6: Final Reflection

- How did the AI respond to your follow-up questions? Were you able to get better information by questioning its initial response?

- How did having expertise in this area impact how you evaluated the AI’s responses?