*Cover image of me learning with a computer in a fairy tale, created (obviously) with AI… Adobe Firefly, to be exact.*

I’ve been thinking a lot about what learning with generative AI is or could be. Is it different from other ways we learn? Does it call for a whole different theory of learning, or is it just making what we have always been doing more efficient and interactive?

I had quite a successful learning experience today… assisted by ChatGPT, and I wonder what it means for these questions. Here’s what happened.

First, some context: one challenge of being an academic who is shifting their research agenda is that there is a lot of new research to learn. This new research uses different vocabulary and writing styles, and to understand and participate in these new communities I have to acquire a large amount of background knowledge.

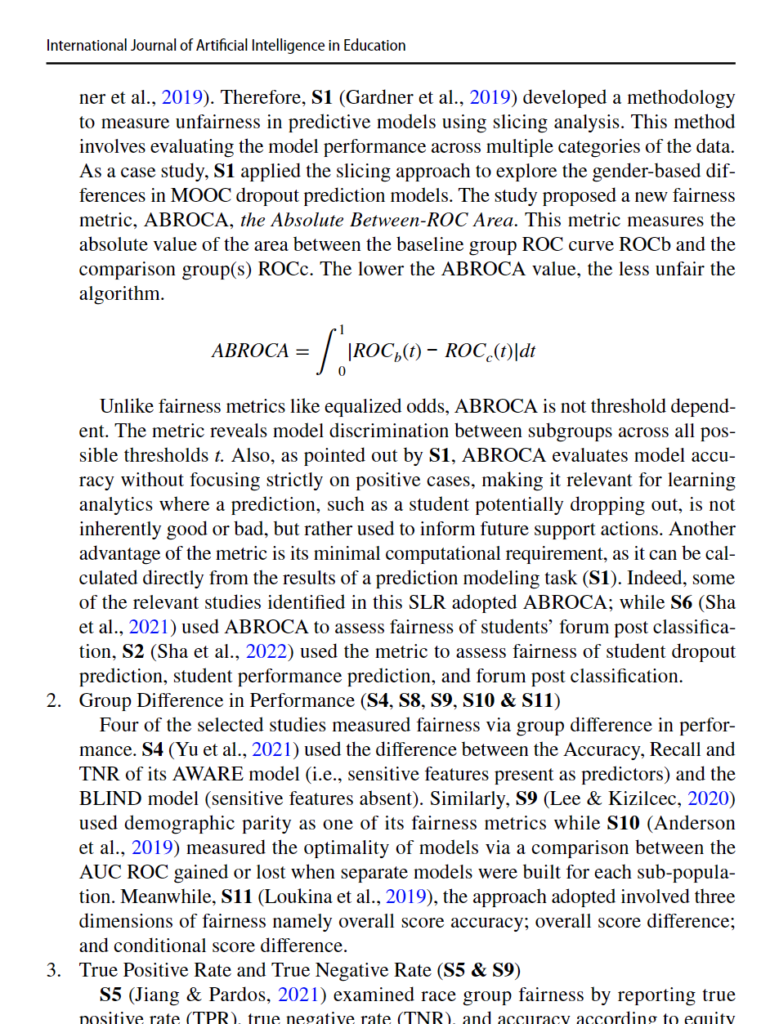

In my attempts to broaden my understanding of machine learning research, I was trying to better understand how fairness is studied and measured in machine learning. I got to this page:

I had no idea what most of these terms and ideas meant. So I turned to ChatGPT.

I started by simply copying paragraphs of text and asking it to explain the terms to me.

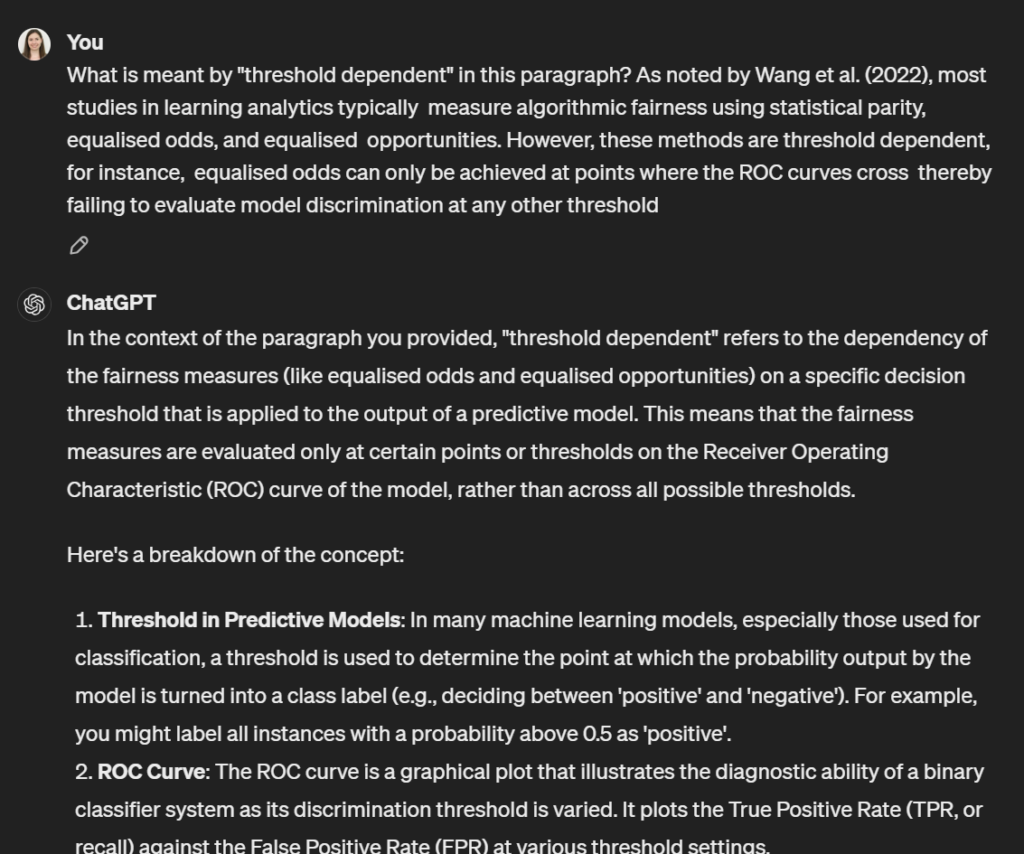

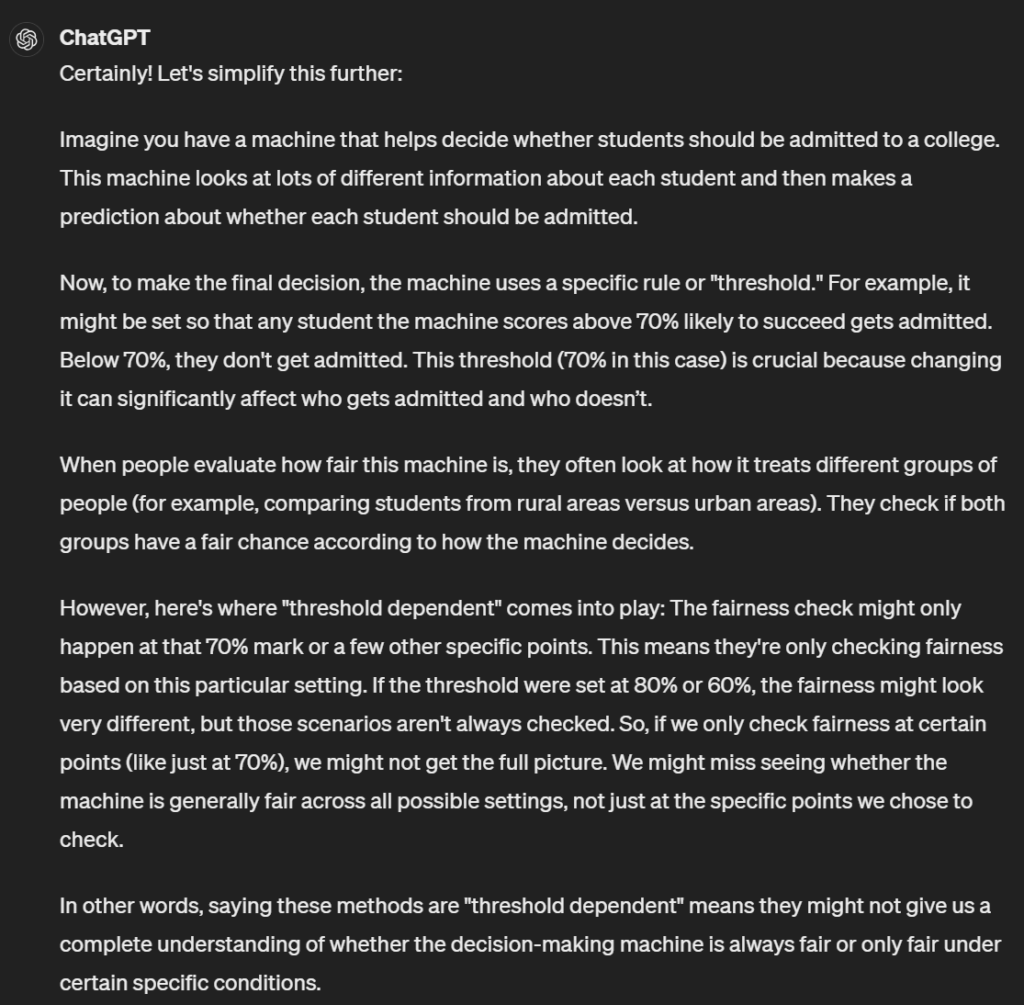

It gave me a definition with a bunch of stuff I still didn’t understand, so I asked for a simpler explanation: “Can you explain that for someone not familiar with machine learning?” (I always ask whether it can; I don’t want to be too bossy.)

It broke it down quite nicely:

I repeated the same process with the next part of the paragraph. I asked a lot of follow-up questions as I worked to understand it, and got helpful responses to each.

Making it Visual

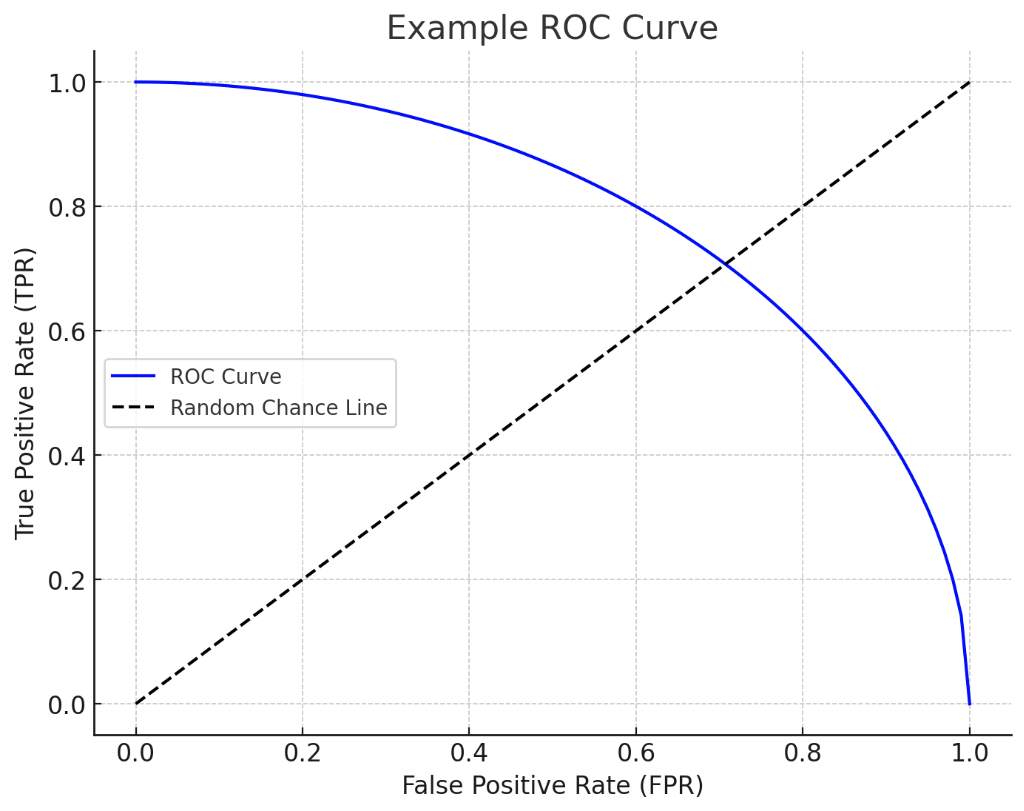

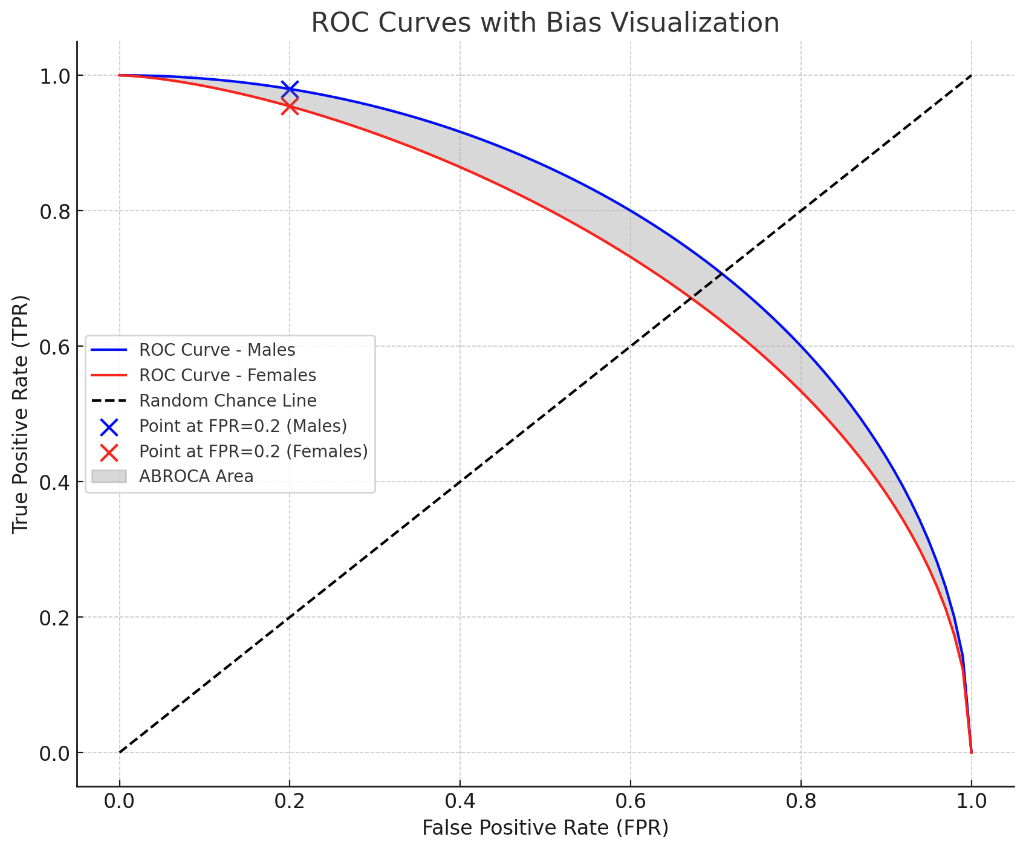

I thought it might help to visualize it, so I asked it to create a graph showing the concept (I asked it to use Python code because I didn’t want a DALL-E-style graph!). It was quite nice:

But it didn’t really show the bias I was trying to understand, so I gradually asked it to add more complexity: two groups, statistics, and so on.
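For anyone who wants to try something similar, here is a minimal sketch of the kind of two-group statistics I mean. It is my own illustration, not the code ChatGPT gave me, and the page I was reading isn’t reproduced here, so the choice of measures is an assumption: it uses two common ones, the demographic parity difference (the gap in selection rates between groups) and the equal opportunity difference (the gap in true positive rates). The two groups, their score distributions, base rates, and the threshold are all invented for illustration, and it prints numbers instead of drawing the graph.

```python
import random


def simulate_group(n, mean_pos, mean_neg, base_rate, rng):
    """Return (score, label) pairs for one hypothetical group.

    Each person is truly positive with probability base_rate; scores are
    drawn from a normal distribution whose mean depends on the true label.
    """
    data = []
    for _ in range(n):
        label = 1 if rng.random() < base_rate else 0
        mean = mean_pos if label else mean_neg
        data.append((rng.gauss(mean, 1.0), label))
    return data


def rates(data, threshold):
    """Selection rate and true positive rate when selecting score >= threshold."""
    selected = [score >= threshold for score, _ in data]
    selection_rate = sum(selected) / len(data)
    # Among truly positive people, what fraction were selected?
    positives = [sel for sel, (_, label) in zip(selected, data) if label == 1]
    tpr = sum(positives) / len(positives) if positives else float("nan")
    return selection_rate, tpr


rng = random.Random(0)  # fixed seed so runs are repeatable
# Hypothetical groups: group B's positives score lower and are less common.
group_a = simulate_group(5000, mean_pos=1.0, mean_neg=-1.0, base_rate=0.5, rng=rng)
group_b = simulate_group(5000, mean_pos=0.5, mean_neg=-1.0, base_rate=0.3, rng=rng)

threshold = 0.0  # one shared decision threshold for both groups
sel_a, tpr_a = rates(group_a, threshold)
sel_b, tpr_b = rates(group_b, threshold)

print(f"Demographic parity difference: {sel_a - sel_b:.3f}")
print(f"Equal opportunity (TPR) difference: {tpr_a - tpr_b:.3f}")
```

Changing `threshold` or the hypothetical group parameters and re-running shows how the two gaps move, which is essentially what I later wanted sliders for.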

Making it Interactive

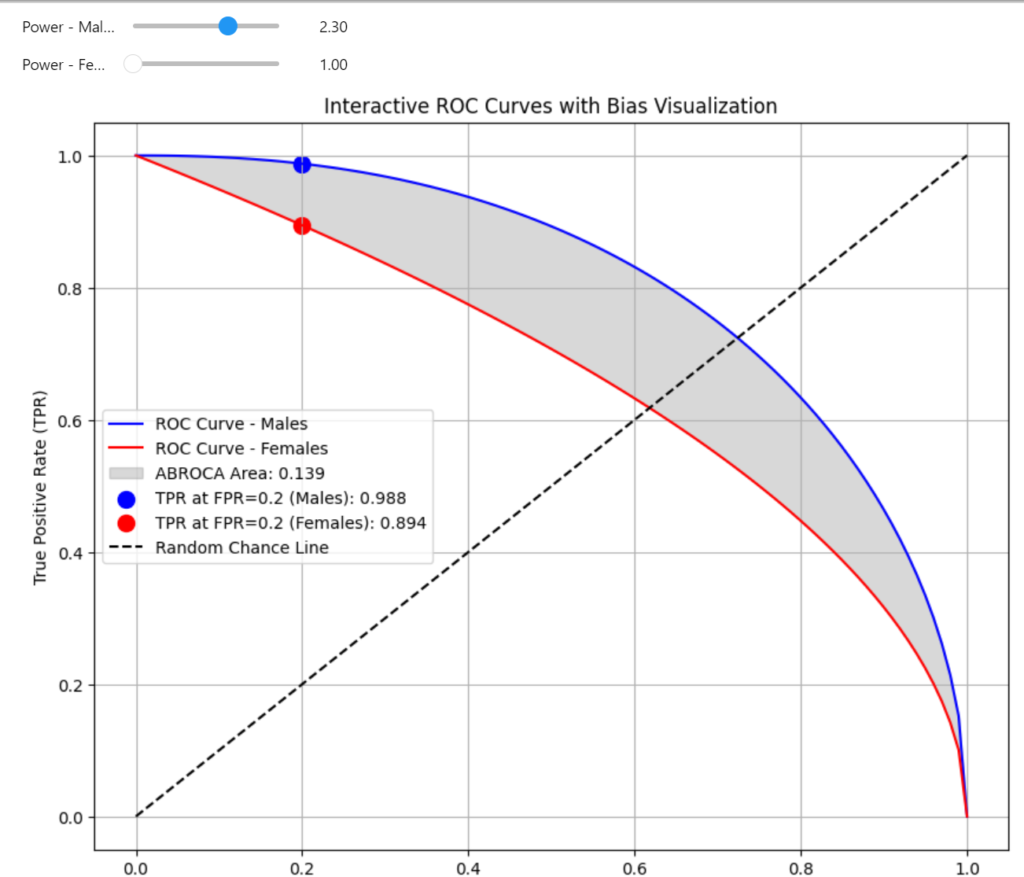

I have enough background knowledge (what we would call TPK, or technological-pedagogical knowledge) that I know that simulations can help illustrate relationships in complex concepts like this. So I asked for help creating an application.

And… it took a few steps. It gave me some nice initial code, but when I tried to run it, it didn’t work. I gave it a picture of what I got, and it informed me that I needed to run it in Jupyter. “I’m using Visual Studio Code, so what do I do?” I asked, and it walked me through downloading the right widgets and packages until I got it working. The first widget was pretty cool: it let me use sliders to adjust the curves and see how the changes affected the fairness measurements.
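Here is a rough sketch of what a slider widget like that can look like. Again, this is a hypothetical illustration rather than the code I actually got: it assumes the ipywidgets package (a common way to get sliders in Jupyter notebooks, including notebooks opened in Visual Studio Code), and the function names, groups, and default values are made up. To keep the sliders responsive, it uses closed-form normal curves instead of simulated data, and the assumption that both groups share the same curve for truly negative people is mine. Outside a notebook it just prints one report.

```python
import math


def normal_sf(x, mean, sd=1.0):
    """Probability that a normal(mean, sd) score is >= x."""
    return 0.5 * math.erfc((x - mean) / (sd * math.sqrt(2)))


def fairness_report(threshold, mean_pos_a, mean_pos_b,
                    mean_neg=-1.0, base_a=0.5, base_b=0.3):
    """Fairness gaps between two hypothetical groups at one threshold."""
    tpr_a = normal_sf(threshold, mean_pos_a)  # true positive rate, group A
    tpr_b = normal_sf(threshold, mean_pos_b)  # true positive rate, group B
    # Assumption: truly negative people score the same way in both groups.
    fpr = normal_sf(threshold, mean_neg)
    sel_a = base_a * tpr_a + (1 - base_a) * fpr  # overall selection rate, A
    sel_b = base_b * tpr_b + (1 - base_b) * fpr  # overall selection rate, B
    return {
        "demographic_parity_diff": sel_a - sel_b,
        "equal_opportunity_diff": tpr_a - tpr_b,
    }


def show(threshold=0.0, mean_pos_a=1.0, mean_pos_b=0.5):
    """Print the report; ipywidgets calls this each time a slider moves."""
    for name, value in fairness_report(threshold, mean_pos_a, mean_pos_b).items():
        print(f"{name}: {value:+.3f}")


try:
    from IPython import get_ipython
    from ipywidgets import FloatSlider, interact
    in_notebook = get_ipython() is not None  # None outside IPython/Jupyter
except ImportError:
    in_notebook = False

if in_notebook:
    interact(
        show,
        threshold=FloatSlider(value=0.0, min=-3.0, max=3.0, step=0.1),
        mean_pos_a=FloatSlider(value=1.0, min=-2.0, max=3.0, step=0.1),
        mean_pos_b=FloatSlider(value=0.5, min=-2.0, max=3.0, step=0.1),
    )
else:
    show()
```

Dragging `mean_pos_b` up to match `mean_pos_a` closes the equal opportunity gap in this toy setup, while the demographic parity gap remains because the invented base rates still differ.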

Next, I asked if there were other features that might help me understand the concept. It obliged, giving me a list of 10 features and new code that included some of those features.

Pedagogical Development

I thought it might be nice to create a whole series of widgets that broke down the elements and built on each other, including explanations and tooltips. It really liked my idea!

All righty! So then I asked it to create the series for me. And… it has some limitations. It really didn’t want to give me the whole thing; no matter how many times I asked, it would only give me the first two widgets. I also ran into some bugs that I have yet to fix. These could very easily be on my end; something may not be set up yet on my computer.

Ultimately, I ran out of my quota of GPT-4 prompts, so I’ll try again later.

Thoughts

- I am finding myself much more confident in my ability to tackle difficult concepts and tasks. Normally, I would have just moved on and figured I didn’t have enough background knowledge to understand the article. Instead, I jumped in and explored.

- Giving it an audience for its explanation (“explain this to someone without a background in machine learning”) was incredibly effective. Most humans would have difficulty making this switch; we struggle to recognize what assumptions we make about what others know. But ChatGPT did this seemingly effortlessly.

- The ability to ask for clarification and test my assumptions is quite incredible.

- The multimodal elements (image, interaction) enhanced my experience and deepened my understanding.

But…

- I would have struggled with this if I didn’t have a fair amount of general background knowledge and confidence that I could figure it out.

- I needed to have some insight into what I did and didn’t understand to ask the right questions.

- My final project, creating the app, is quite difficult for GPT-4. I believe if I kept working with it I could get there, but it will take more time; I will probably have to break it down and have it do one step at a time. However, I never would have even attempted this without it! And, as Ethan Mollick says, “Consider this the worst AI you will ever use.”

Questions still linger: What skills do I have that enable me to do this? Do I understand the concept as well as if I had an expert teach me? What does this mean for technology in teaching and learning?

Ultimately, I’m not sure if the core of my learning is really different. But it absolutely is more efficient and provides incredible “just-in-time” support. It feels a bit like a superpower.
