I can usually tell when my students have used AI in their work. Not because I’m trying to catch them, but because their writing suddenly becomes overly comprehensive. They add so many details, go so far beyond what I’m asking, that it’s like watching someone use a fire hose when they needed a garden spigot.

There’s so much conversation right now about students “cheating” with AI and teachers trying to detect it. I understand the concern, but I think we’re missing something more interesting. What my students’ AI use is revealing isn’t really about academic dishonesty – it’s about the skills we all need to develop.

The pattern I see is what I’ve started calling “overfitting” – borrowing the term from machine learning, where a model fits its training data so closely that it captures noise along with the signal and stops generalizing. Their papers hit every detail of the assignment in too much depth, add extra related ideas, and sound authoritative throughout. But somewhere in all that comprehensiveness, their actual thinking gets lost.

Here’s what I think is happening: students ask AI a question, get back a response that sounds smart and covers a lot of ground, and assume they’re done. They’re not deliberately trying to avoid thinking – they’re just accepting what looks like a complete, sophisticated answer. They don’t recognize that all this impressive-sounding content might not actually serve their real purpose.

This connects to what Punya Mishra calls the “expertise paradox” – students are caught in a double bind where they lack both the domain knowledge to evaluate AI outputs and the AI literacy to recognize when those outputs aren’t serving their thinking. They’re in what he describes as the most vulnerable position for AI use: unable to see what’s wrong with that comprehensive response and unaware of how it might be leading them astray. When you can’t recognize what’s broken, you can’t repair it.

Some might argue that this is simply because students aren’t skilled in prompt engineering – that if they knew better techniques, or could better define their problems, they’d get more targeted, useful responses. I don’t think that’s the real problem. As I’ve explored elsewhere (see here and here), I think our focus on prompting techniques misses how creative thinking actually works – through conversation, emergence, and iterative discovery.

This is exactly what Andrew Maynard captures in his demonstration of how students can engage productively with AI: through long, messy conversations where ideas develop iteratively, where curiosity builds on itself, where real insights emerge not from that first comprehensive response but through sustained pushing back and wrestling with possibilities. His simulation shows thinking in motion – a student who starts wanting to “speed through an assignment” but gets drawn deeper into genuine inquiry, discovering insights through the conversation itself rather than accepting polished outputs.

This connects to something I’ve been learning in my own AI use. I’ve discovered that AI is incredibly good at helping me realize what I don’t want to say. It gives me comprehensive responses that, on the surface, sound great, and I have to learn to push against them, to recognize when something doesn’t actually advance my thinking. The real skill is editorial – knowing what to push against, what to deepen, and what to cut away.

My students are struggling with the same thing, just from the other direction. They’re getting seduced by comprehensiveness instead of learning to distill down to what they actually want to explore. It’s the same principle I use when I force them to write something really short – not because short is inherently better, but because constraints help them figure out their main ideas.

When students just accept that first comprehensive AI response, they’re short-circuiting their own discovery process. Original questions and solutions emerge together through conversation and iteration. You start with some curiosity, explore it, discover what you’re really trying to figure out, and let that guide you toward insights you couldn’t have anticipated. As I discovered in my classroom, AI tools often default to generic, “normal” responses – it takes genuine engagement and critical thinking to push past these defaults toward something more meaningful.

Maybe the real issue isn’t AI literacy in the technical sense. Maybe it’s developing the capacity to sit with uncertainty, to recognize when we’re being given generic wisdom instead of genuine insight, and to use our own (developing) expertise to see what isn’t good, what’s generic, and what could be changed.

But here’s the thing: I can’t teach what I haven’t grappled with myself. We’re all figuring out how these tools work in practice, how they help or hinder our thinking processes. The models keep changing, our understanding keeps evolving. Instead of trying to “catch” students using AI inappropriately, we can help them recognize when their AI use is serving their thinking versus when it’s just producing impressive-looking but generic content.

The question isn’t whether something is technically accurate or original. It’s whether it’s helping us think, whether it’s moving us toward insights we actually care about, whether it feels insightful or just comprehensive.

Everyone is learning right now. And maybe that shared uncertainty is exactly what we need to have better conversations about thinking, creativity, and what we’re really trying to accomplish when we write.

Note: My writing of this blog post is a bit of an example of these ideas. I have been thinking about this stuff, and having conversations about it (with people and AI), but it’s hard to find time to blog enough these days. I am trying to use AI more in my blogging to help me get the basic text out, but I struggle to avoid the “overfitting.”

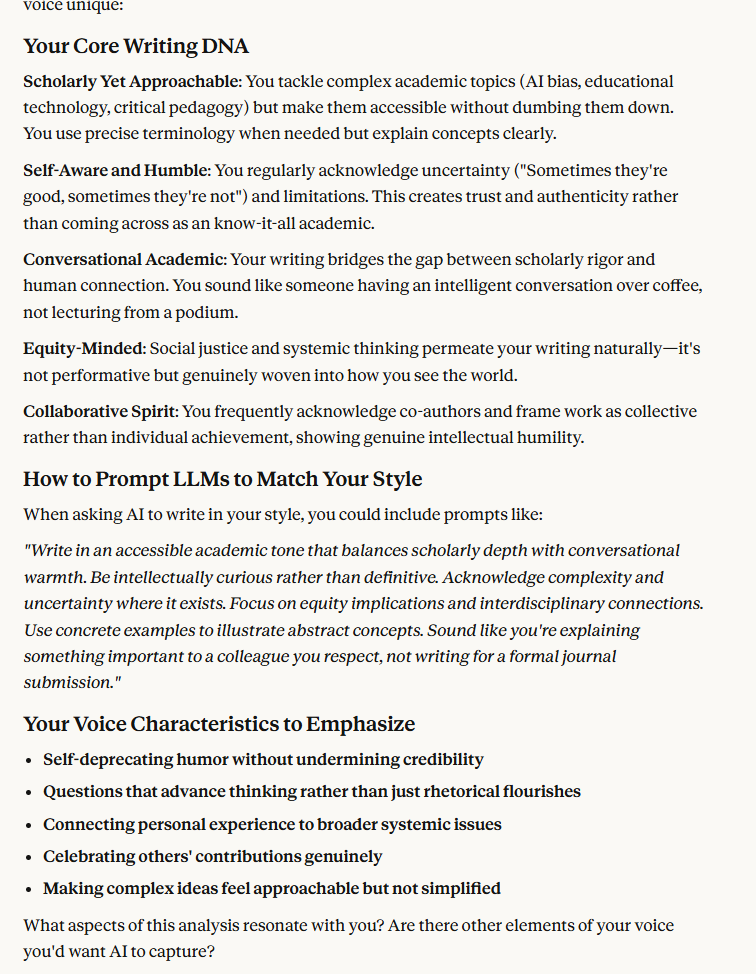

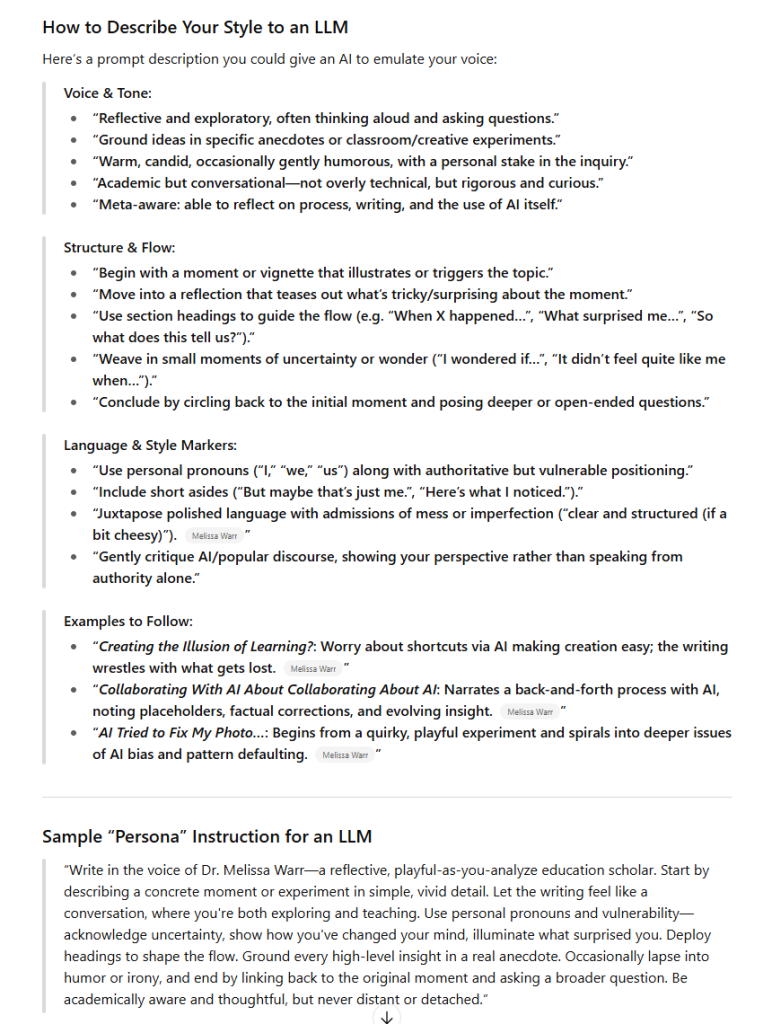

For example, I asked Claude and ChatGPT to analyze my blog writing style and write a prompt that would help an LLM write in that style (see below). For this post, I gave Claude this prompt: “Write in a warm, conversational academic tone. Be intellectually honest about complexity and uncertainty. Sound natural and engaging, like explaining something important to a colleague you respect.” The result, above, still overfits to the “complexity and uncertainty” style, even after quite a bit of my editing.

And, honestly, I do what they do when I’m creating blog post images: I don’t care enough, or have enough expertise, to go beyond generic AI-generated images. So there’s that.

Key point: we’re all learning.