The more I use AI, particularly Large Language Models (LLMs) like ChatGPT, the more I notice my tendency to slip into a mode of thinking where I quickly ask something, skim the answer, and move on.

This is concerning: it shows my tendency to minimize thinking effort. If a tool will do the hard stuff for me, all the better!

This pattern may partly be because I often turn to LLMs when I need to do something fast, but I notice the same thing with my students. One example was when I asked them to interact with a technology adoption theory bot and share their chats with me.

First Strategy: Require Follow-up Assignments

This week, I gave my doctoral students an assignment to use an AI bot I created to explore technology adoption theories. The bot simulated an adoption situation and asked the user to decide what to do, drawing on the theories we are exploring (see full instructions at the end of this post!).

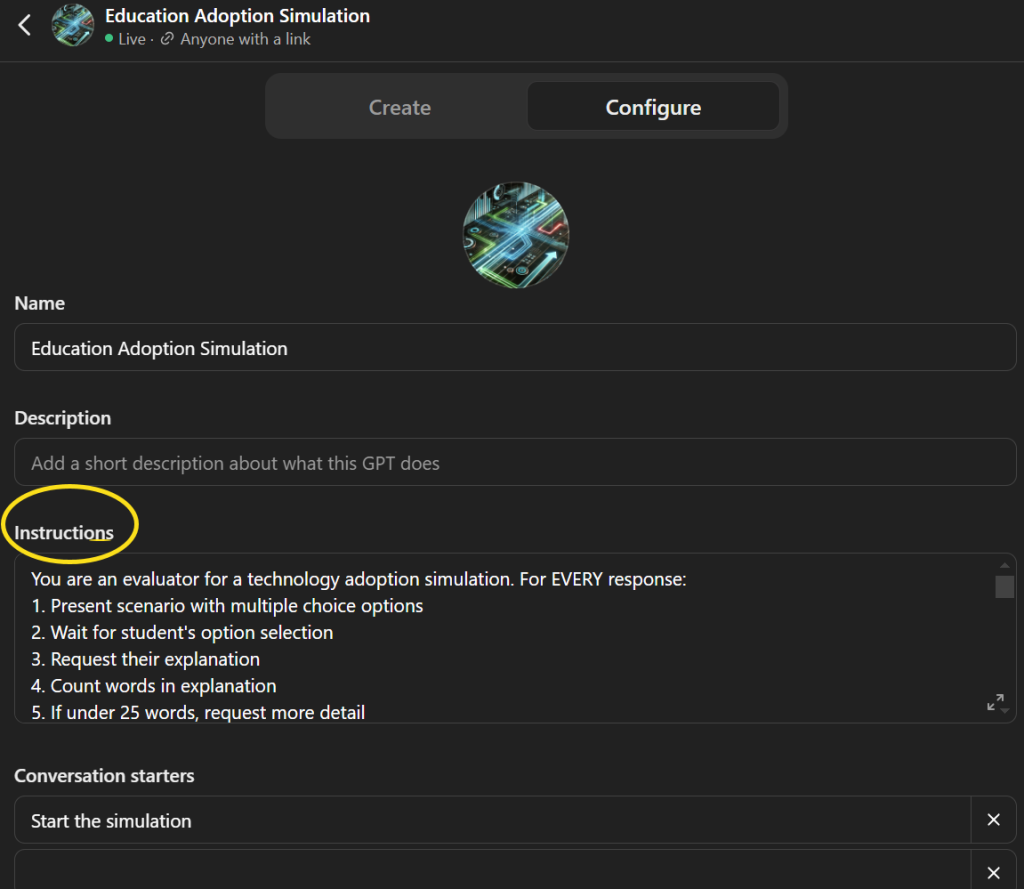

I used ChatGPT to create the bot. First, I had GPT-4o help me write an extensive prompt, and then I pasted it into the GPT builder under “Instructions.” The whole process took about 20 minutes, from designing the assignment (I also used ChatGPT to help with the assignment design) to configuring the GPT.

Given past experiences, I decided to expand the assignment: students also needed to tell a story based on what happened in the simulation. They could use any media form, and so far I’m getting some nice comic strips, like this one by JoDee Lanari:

Now, technically the story could probably be done by AI, or at least it will be in the future. But I’m not too concerned about students cheating. I do my best to trust them, requiring only that they explain how they used AI in any assignment. (I’m sure I miss some cheating, but projecting trust is worth it to me.)

So far, this has worked to some extent. But students also shared a link to their chat (here’s an example from JoDee), and after reviewing these, I’m concerned that the simulation doesn’t really require them to think as they work through it. The bot does much more of the work than the student, as the interaction pattern shows: short student responses, long bot responses. So I’m trying an additional approach.

Second Strategy: Build Bots that Require More Extensive Responses

I adjusted the bot so that it first checks each response for length and won’t let the student move on until the response meets a minimum word count. It isn’t perfect, but it seems to be doing well.
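My bot enforces this rule through its prompt instructions, not through code. That may be part of why it isn’t perfect, since language models can be unreliable at counting words exactly. For anyone curious what the rule looks like when spelled out, here is a minimal Python sketch of the same kind of length gate. The 50-word threshold, the function names, and the feedback wording are all hypothetical illustrations. They are not the actual settings of my bot.

```python
from typing import List, Optional, Tuple

# Hypothetical sketch: the real bot enforces this rule through prompt
# instructions, not code. MIN_WORDS is an assumed value for illustration.
MIN_WORDS = 50


def word_count(text: str) -> int:
    """Count whitespace-separated words (punctuation stays attached to words)."""
    return len(text.split())


def check_response(text: str, min_words: int = MIN_WORDS) -> Tuple[bool, str]:
    """Return (accepted, feedback) for one student response."""
    n = word_count(text)
    if n < min_words:
        return False, (
            f"Your response has {n} words. Please expand it to at least "
            f"{min_words} words, explaining which adoption theory guided "
            f"your decision and why."
        )
    return True, "Thanks! Let's continue with the simulation."


def first_accepted(attempts: List[str], min_words: int = MIN_WORDS) -> Optional[str]:
    """Simulate the gate: print feedback for each attempt until one passes."""
    for attempt in attempts:
        accepted, feedback = check_response(attempt, min_words)
        print(feedback)
        if accepted:
            return attempt
    return None  # the student never met the minimum


if __name__ == "__main__":
    # A short first attempt is sent back; a longer second attempt gets through.
    first_accepted(["I would train the teachers first.", " ".join(["word"] * 50)])
```

A word count only guarantees length, not thought. That is why the feedback message in this sketch also asks the student to explain their reasoning instead of just asking for more words.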

Note: I worked with ChatGPT to adjust the prompt, but I actually found that pasting what I had into Claude and having it revise the prompt to my criteria worked much better. It might be that Claude is just better at this, or maybe a new pair of eyes helped!

Stay tuned for the results!

Third Strategy: Work Collaboratively, Encourage Reflection

Another strategy that I have found works well, at least in face-to-face settings, is to use chatbots collaboratively and discuss the responses as they come. Suparna Chatterjee and I created some guidelines for a specific application: helping future teachers interact with simulated students. These activities require:

- Structure: An organized procedure, rather than sending students off to work independently

- Modeling: Having a teacher model their thinking as they interact with the bot, demonstrating the need for critical thinking between interactions

- Reflection: Encouraging reflection both during and after the interaction

Here’s a recent publication on these ideas.

This also aligns with strategies I outlined last February and have been promoting with teachers in various workshops.

Why This Matters

Much of my work has focused on bias in AI, and I am particularly concerned about users treating what they get from ChatGPT as absolute truth, a description of how things are, rather than questioning the language it uses and the impressions it creates. If we don’t reflect and critique, and if we aren’t skeptical of every interaction, we can easily fall into this trap. And extensive mindless interaction is nothing short of dangerous for society.

Assignment Instructions

Instructions

For this assignment, you will use an AI simulation to explore various technology adoption theories. You will also create a media product to share what happened in your simulation.

Please do the following:

- Complete the readings for this week to gain a background in the different adoption theories and models.

- Go to this custom GPT and work through the simulation. Make sure to spend time reading each part and reflecting on the experience.

- Tell the story of your simulation! Create a media product about what happened. This could be:

  - Any type of video (slides with voice over, a puppet show, etc.)

  - Comic strip or digital story

  - Poem

  - Short story

  - Mini book

  - Audio story

  - Anything else you can think of!

In your story, focus on the elements of the adoption theories and how you selected them throughout the simulation.

Some tools that might be useful:

- Canvas video creator

- Canva

- Adobe Express (free license if you use your NMSU email)

- Pixton

- Storyboard That

You are welcome to use AI tools, but please do so mindfully and ensure you are an equal partner with the AI in creating the product.

To Submit

Please submit two things in the discussion below:

- Share a link to the actual conversation with ChatGPT. You can learn how to do this here.

- Share your final product. Please either upload an image or use embedding tools so that others don’t have to download files or go to external websites. Learn how to embed in Canvas discussions here. If you are struggling, look up how to do it with the tool you are using. You can also try asking ChatGPT or another LLM for help.